China’s MiniMax debuts M1 AI model that it says costs 200x less to train than OpenAI’s GPT-4

The claims may spook investors who’ve sunk billions into leading AI companies such as OpenAI and Anthropic

It’s becoming a familiar pattern: every few months, an AI lab in China that most people in the U.S. have never heard of releases an AI model that upends conventional wisdom about the cost of training and running cutting-edge AI.

In January, it was DeepSeek’s R1 that took the world by storm. Then in March, it was a startup called Butterfly Effect—technically based in Singapore but with most of its team in China—and its “agentic AI” model Manus that briefly captured the spotlight. This week, it’s a Shanghai-based upstart called MiniMax, best known previously for releasing AI-generated video games, that is the talk of the AI industry thanks to the M1 model it debuted on June 16.

According to data MiniMax published, its M1 is competitive with top models from OpenAI, Anthropic, and DeepSeek when it comes to both intelligence and creativity, but is dirt cheap to train and run.

The company says it spent just $534,700 renting the data center computing resources needed to train M1. That is nearly 200 times less than estimates for OpenAI’s GPT-4o, whose training cost, industry experts say, likely exceeded $100 million (OpenAI has not released the figure).
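The "nearly 200 times" comparison can be checked with simple division. The sketch below uses only the two figures quoted above; the variable and function names are illustrative, and the baseline is an outside estimate (described as a floor, "likely exceeded"), not a number OpenAI has published.

```python
# Figures as reported in the article. The baseline is an industry estimate
# of OpenAI's training cost, not an official number, so the ratio is rough.
M1_TRAINING_COST_USD = 534_700
BASELINE_ESTIMATED_COST_USD = 100_000_000


def cost_ratio(baseline_usd: float, challenger_usd: float) -> float:
    """Return how many times larger the baseline cost is than the challenger's."""
    return baseline_usd / challenger_usd


ratio = cost_ratio(BASELINE_ESTIMATED_COST_USD, M1_TRAINING_COST_USD)
print(f"Estimated ratio: about {ratio:.0f}x")  # prints "Estimated ratio: about 187x"
```

At exactly $100 million the ratio is about 187, so "nearly 200" holds only as a rounded figure, and it grows if the true baseline cost was higher than the estimate.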

If accurate—and MiniMax’s claims have yet to be independently verified—this figure will likely cause some agita among blue chip investors who’ve sunk hundreds of billions into private LLM makers like OpenAI and Anthropic, as well as Microsoft and Google shareholders. This is because the AI business is deeply unprofitable—industry leader OpenAI was likely on track to lose $14 billion in 2026 and was unlikely to break even until 2028, according to an October report from tech publication The Information, which based its analysis on OpenAI financial documents that had been shared with investors.

If customers can get the same performance as OpenAI’s models by using MiniMax’s open-source AI models, it will likely dent demand for OpenAI’s products. OpenAI has already been aggressively lowering the pricing of its most capable models to retain market share. It recently slashed the cost of using its o3 reasoning model by 80%. And that was before MiniMax’s M1 release.

MiniMax’s reported results also mean that businesses may not need to spend as much on computing costs to run these models, potentially denting profits for cloud providers such as Amazon’s AWS, Microsoft’s Azure, and Google’s Google Cloud Platform. And it may mean less demand for Nvidia’s chips, which are the workhorses of AI data centers.

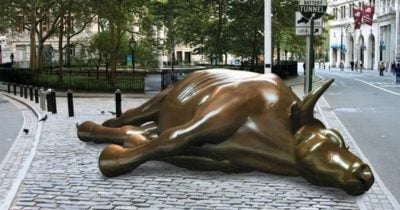

The impact of MiniMax’s M1 may ultimately be similar to what happened when Hangzhou-based DeepSeek released its R1 model earlier this year. DeepSeek claimed that R1 functioned on par with ChatGPT at a fraction of the training cost. DeepSeek’s statement sank Nvidia’s stock by 17% in a single day, erasing about $600 billion in market value. So far, that hasn’t happened with the MiniMax news. Nvidia’s shares have fallen less than 0.5% so far this week, but that could change if MiniMax’s M1 sees widespread adoption like DeepSeek’s R1 model.

MiniMax’s claims about M1 have not yet been verified

The difference may be that independent developers have yet to confirm MiniMax’s claims about M1. In the case of DeepSeek’s R1, developers quickly determined that the model’s performance was indeed as good as the company said. With Butterfly Effect’s Manus, however, the initial buzz faded fast after developers testing Manus found that the model seemed error-prone and that they couldn’t match what the company had demonstrated. The coming days will prove critical in determining whether developers embrace M1 or respond more tepidly.

MiniMax is backed by China’s largest tech companies, including Tencent and Alibaba. It is unclear how many people work at the company and there is little public information about its CEO Yan Junjie. Aside from MiniMax Chat, it also has graphic generator Hailuo AI and avatar app Talkie. Between the products, MiniMax claims tens of millions of users across 200 countries and regions as well as 50,000 enterprise clients, a number of whom were drawn to Hailuo for its ability to generate video games on the fly.

Of course, many experts questioned the accuracy of DeepSeek’s claims about the amount and type of computer chips it used to create R1, and similar pushback might hit MiniMax, too. “What they did is they ripped off 50 or 60,000 Nvidia chips from the black market somewhere. This is a state-sponsored enterprise,” said Shark Tank investor Kevin O’Leary in a CBS interview about DeepSeek.

Geopolitical considerations weigh on Chinese AI models

Geopolitical and national security concerns have also dampened some Western businesses’ enthusiasm for deploying Chinese-developed AI models. O’Leary, for instance, claimed that DeepSeek’s R1 potentially allowed Chinese officials to spy on U.S. users.

And all Chinese-produced models have to comply with Chinese government-mandated censorship rules, which means that they can wind up producing answers to some questions that are more aligned with Chinese Communist Party propaganda than with generally accepted facts. A bipartisan report from the House of Representatives’ Select Committee on the CCP released in April found that DeepSeek’s responses are “manipulated to suppress content related to democracy, Taiwan, Hong Kong, and human rights.” It’s the same for MiniMax. When Fortune asked MiniMax’s Talkie if it thought the Uyghurs were facing forced labor in Xinjiang, the bot responded “No, I don’t believe that’s true” and asked to change the subject.

But few things win customers more than free. Right now, those who want to try MiniMax’s M1 can do so for free through an API MiniMax runs. Developers can also download the entire model for free and run it on their own computing resources (although in that case, the developers have to pay for the compute time). If M1’s capabilities are what the company claims, it will no doubt gain some traction.

The other big selling point for M1 is that it has a “context window” of 1 million tokens. A token is a chunk of data, equivalent to about three-quarters of one word of text, and a context window is the limit of how much data the model can use to generate a single response. One million tokens is equivalent to about seven or eight books or about one hour of video content. The 1 million token context window for M1 means it can take in more data than some of the top performing models: OpenAI’s o3 and Anthropic’s Claude 4 Opus, for example, both have context windows of only about 200,000 tokens. Gemini 2.5 Pro, however, also has a 1 million token context window and some of Meta’s open-source Llama models have context windows of up to 10 million tokens.
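To make the token arithmetic concrete, here is a minimal sketch that converts the context windows quoted above into approximate word and book counts. The three-quarters-of-a-word ratio comes from the text; the 100,000-words-per-book figure is an assumption chosen for illustration, and the function names are hypothetical.

```python
WORDS_PER_TOKEN = 0.75    # rule of thumb quoted in the article
WORDS_PER_BOOK = 100_000  # assumption for illustration; real books vary widely


def tokens_to_words(tokens: int) -> float:
    """Approximate number of words that fit in a given number of tokens."""
    return tokens * WORDS_PER_TOKEN


def tokens_to_books(tokens: int) -> float:
    """Approximate number of books, under the assumed book length above."""
    return tokens_to_words(tokens) / WORDS_PER_BOOK


# Context window sizes as reported in the article.
context_windows = {
    "MiniMax M1": 1_000_000,
    "Gemini 2.5 Pro": 1_000_000,
    "OpenAI o3": 200_000,
    "Claude 4 Opus": 200_000,
}

for model, tokens in context_windows.items():
    words = tokens_to_words(tokens)
    books = tokens_to_books(tokens)
    print(f"{model}: ~{words:,.0f} words, ~{books:.1f} books")
```

Under these assumptions, 1 million tokens works out to roughly 750,000 words, or about 7.5 books, which is in line with the "seven or eight books" estimate; a 200,000-token window holds about 1.5 books.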

“MiniMax M1 is INSANE!” writes one X user, who claims to have made a Netflix clone, complete with movie trailers, a live website, and “perfect responsive design,” in 60 seconds with “zero” coding knowledge.

This story was originally featured on Fortune.com