Meta sues nudify app Crush AI

Meta has taken legal action against the advertisers behind a popular deepfake porn app, as the company recommits to its ad policies.

Meta has struck out against a popular app used to produce AI-generated nonconsensual intimate images — commonly referred to as "nudify" or "undress" apps — as the company selectively cracks down on advertisers.

In a new lawsuit filed in Hong Kong against the makers of a commonly used app known as Crush AI, the tech giant claims parent company Joy Timeline HK intentionally bypassed Meta's ad review process, using new domain names and networks of advertiser accounts to promote the app's AI-powered deepfake services.

"This legal action underscores both the seriousness with which we take this abuse and our commitment to doing all we can to protect our community from it. We’ll continue to take the necessary steps — which could include legal action — against those who abuse our platforms like this," Meta wrote in a press release.

Meta has previously come under fire for failing to stop nudify apps from advertising on its platform, including allowing ads featuring explicit deepfake images of celebrities to appear repeatedly. In addition to its advertising policies, Meta prohibits the spread of nonconsensual intimate imagery and blocks the search terms "nudify," "undress" and "delete clothing." According to an analysis by Cornell researcher Alexios Mantzarlis, Crush AI allegedly ran more than 8,000 ads across Meta platforms between the fall of 2024 and January 2025, with 90 percent of its traffic coming from Meta platforms. More broadly, AI-generated ad content has plagued users as Meta has relaxed its content moderation policies in favor of automated review processes and community-generated fact-checking.

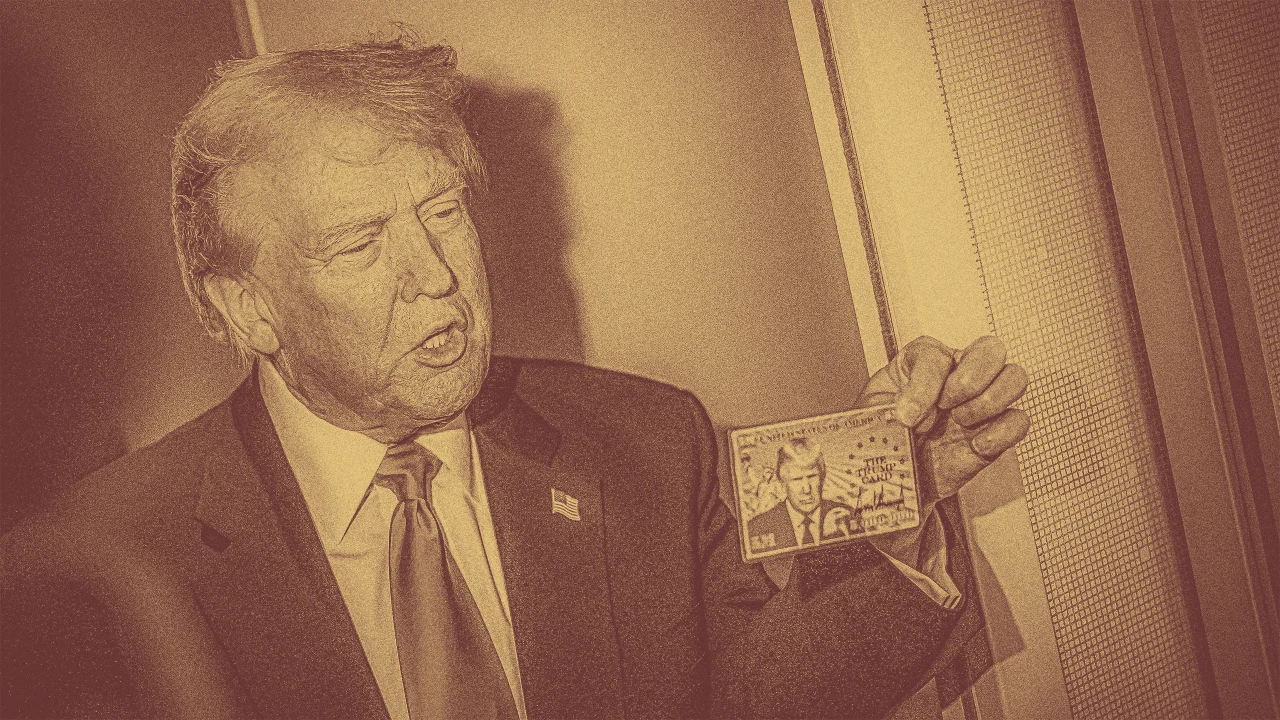

Victims of AI-generated nonconsensual intimate imagery have spent years fighting for greater industry regulation and legal pathways for recourse. In May, President Donald Trump signed the Take It Down Act, a law that criminalizes the publication of nonconsensual intimate imagery and sets mandatory takedown policies for online platforms. AI-generated child sexual abuse material (CSAM) has also proliferated across the internet in recent years, prompting widespread concern about the safety and regulation of generative AI tools.

In addition to taking legal action against Crush AI, Meta announced it is developing new detection technology to more accurately flag and remove ads for nudify apps. The company is also stepping up its work with the Tech Coalition’s Lantern program, an industry initiative to pool information on child online safety, and will continue sharing information on violating companies and products. Since March, Meta has reported more than 3,800 unique URLs related to nudify apps and websites and discovered four separate networks trying to promote their services, according to the company.
