Everyone’s using AI at work. Here’s how companies can keep data safe

As enterprises roll out AI tools across the workforce, business leaders are learning the best ways to mitigate security issues.

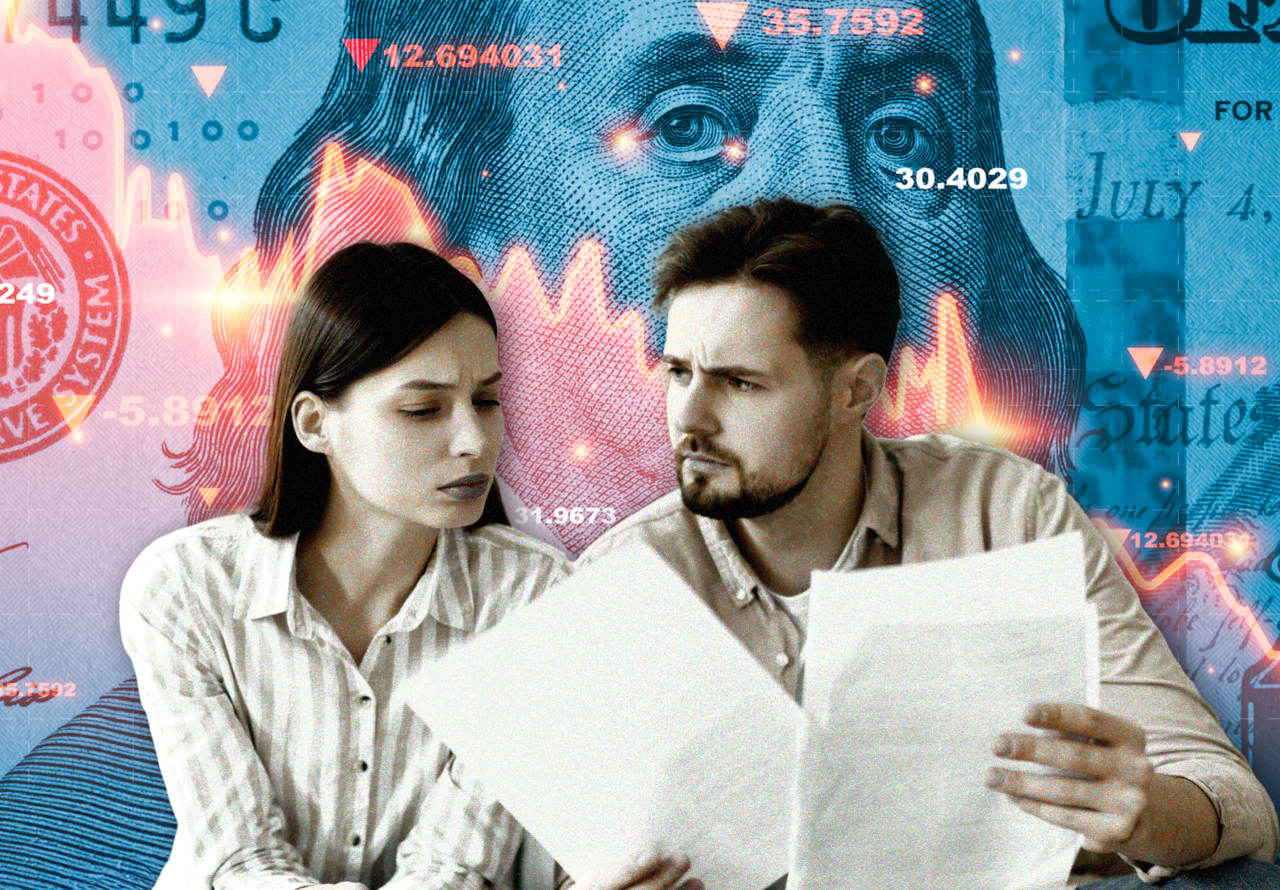

Companies across industries are encouraging their employees to use AI tools at work. Their workers, meanwhile, are often all too eager to make the most of generative AI chatbots like ChatGPT. So far, everyone is on the same page, right?

There’s just one hitch: How do companies protect sensitive company data from being hoovered up by the same tools that are supposed to boost productivity and ROI? After all, it’s all too tempting to upload financial information, client data, proprietary code, or internal documents into your favorite chatbot or AI coding tool to get the quick results you want (or that your boss or colleague might be demanding). In fact, a new study from data security company Varonis found that shadow AI (unsanctioned generative AI applications) poses a significant threat to data security, because such tools can bypass corporate governance and IT oversight, potentially leading to data leaks. The study found that nearly all companies have employees using unsanctioned apps, and nearly half have employees using AI applications considered high-risk.

For information security leaders, one of the key challenges is educating workers about what the risks are and what the company requires. They must ensure that employees understand the types of data the organization handles—ranging from corporate data like internal documents, strategic plans, and financial records, to customer data such as names, email addresses, payment details, and usage patterns. It’s also critical to communicate how each type of data is classified—for example, whether it is public, internal-only, confidential, or highly restricted. Once this foundation is in place, clear policies and access boundaries must be established to protect that data accordingly.
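To make the idea of classification levels and access boundaries concrete, here is a minimal sketch of how such a policy could be expressed in code. The four levels come from the examples above; the tool names, the per-tool ceilings, and the `may_share` helper are hypothetical, chosen only for illustration, and do not describe any company’s actual policy.

```python
from enum import IntEnum


class Classification(IntEnum):
    """The four example levels named in the text, ordered by sensitivity."""
    PUBLIC = 0
    INTERNAL_ONLY = 1
    CONFIDENTIAL = 2
    HIGHLY_RESTRICTED = 3


# Hypothetical policy: the most sensitive level each kind of tool may receive.
# These ceilings are illustrative assumptions, not recommendations.
MAX_LEVEL_BY_TOOL = {
    "approved_enterprise_assistant": Classification.CONFIDENTIAL,
    "unsanctioned_chatbot": Classification.PUBLIC,
}


def may_share(level: Classification, tool: str) -> bool:
    """Return True if data at `level` may be sent to `tool` under the policy.

    Tools missing from the policy are treated as unsanctioned,
    so they may only receive public data.
    """
    ceiling = MAX_LEVEL_BY_TOOL.get(tool, Classification.PUBLIC)
    return level <= ceiling
```

Under these assumed ceilings, an internal-only document could go to the approved assistant but not to an unvetted chatbot, and highly restricted data could go to neither. Defaulting unknown tools to the lowest ceiling reflects the shadow AI concern: a tool nobody has vetted gets no sensitive data.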

Striking a balance between encouraging AI use and building guardrails

“What we have is not a technology problem, but a user challenge,” said James Robinson, chief information security officer at data security company Netskope. The goal, he explained, is to ensure that employees use generative AI tools safely—without discouraging them from adopting approved technologies.

“We need to understand what the business is trying to achieve,” he added. Rather than simply telling employees they’re doing something wrong, security teams should work to understand how people are using the tools, then determine whether the policies are the right fit or need to be adjusted to allow employees to share information appropriately.

Jacob DePriest, chief information security officer at password protection provider 1Password, agreed, saying that his company is trying to strike a balance with its policies, both encouraging AI usage and educating employees so that the right guardrails are in place.

Sometimes that means making adjustments. For example, the company released a policy on the acceptable use of AI last year as part of its annual security training. “Generally, it’s this theme of ‘Please use AI responsibly; please focus on approved tools; and here are some unacceptable areas of usage.’” But the way it was written caused many employees to be overly cautious, he said.

“It’s a good problem to have, but CISOs can’t just focus exclusively on security,” he said. “We have to understand business goals and then help the company achieve both business goals and security outcomes as well. I think AI technology in the last decade has highlighted the need for that balance. And so we’ve really tried to approach this hand in hand between security and enabling productivity.”

Banning AI tools to avoid misuse does not work

But companies that think banning certain tools is a solution should think again. Brooke Johnson, SVP of HR and security at Ivanti, said her company found that among people who use generative AI at work, nearly a third keep their AI use completely hidden from management. “They’re sharing company data with systems nobody vetted, running requests through platforms with unclear data policies, and potentially exposing sensitive information,” she said in a message.

The instinct to ban certain tools is understandable but misguided, she said. “You don’t want employees to get better at hiding AI use; you want them to be transparent so it can be monitored and regulated,” she explained. That means accepting the reality that AI use is happening regardless of policy, and conducting a proper assessment of which AI platforms meet your security standards.

“Educate teams about specific risks without vague warnings,” she said. Help them understand why certain guardrails exist, she suggested, while emphasizing that the guardrails are not punitive. “It’s about ensuring they can do their jobs efficiently, effectively, and safely.”

Agentic AI will create new challenges for data security

Think securing data in the age of AI is complicated now? AI agents will up the ante, said DePriest.

“To operate effectively, these agents need access to credentials, tokens, and identities, and they can act on behalf of an individual—maybe they have their own identity,” he said. “For instance, we don’t want to facilitate a situation where an employee might cede decision-making authority over to an AI agent, where it could impact a human.” Organizations want tools to help facilitate faster learning and synthesize data more quickly, but ultimately, humans need to be able to make the critical decisions, he explained.

Whether it is the AI agents of the future or the generative AI tools of today, striking the right balance between enabling productivity gains and doing so in a secure, responsible way may be tricky. But experts say every company is facing the same challenge—and meeting it is going to be the best way to ride the AI wave. The risks are real, but with the right mix of education, transparency, and oversight, companies can harness AI’s power—without handing over the keys to their kingdom.

This story was originally featured on Fortune.com