‘We are past the event horizon’: Sam Altman thinks superintelligence is within our grasp and makes 3 bold predictions for the future of AI and robotics

New blog post from OpenAI CEO Sam Altman talks about a future with humanoid robots, AGI, superintelligence and job losses.

- Sam Altman says humanity is “close to building digital superintelligence”

- Intelligent robots that can build other robots “aren’t that far off”

- He sees “whole classes of jobs going away” but “capabilities will go up equally quickly, and we’ll all get better stuff”

In a long blog post, OpenAI CEO Sam Altman sets out his vision of the future and argues that artificial general intelligence (AGI) is now inevitable and about to change the world.

In what could be viewed as an attempt to explain why we haven’t achieved AGI quite yet, Altman seems at pains to frame the progress of AI as a gentle curve rather than a rapid acceleration, while insisting that we are now “past the event horizon” and that “when we look back in a few decades, the gradual changes will have amounted to something big.”

“From a relativistic perspective, the singularity happens bit by bit,” writes Altman, “and the merge happens slowly. We are climbing the long arc of exponential technological progress; it always looks vertical looking forward and flat going backwards, but it’s one smooth curve.”

But even on this more gradual timeline, Altman is confident that we’re on our way to AGI, and he predicts three ways it will shape the future:

1. Robotics

Of particular interest to Altman is the role that robotics is going to play in the future:

“2025 has seen the arrival of agents that can do real cognitive work; writing computer code will never be the same. 2026 will likely see the arrival of systems that can figure out novel insights. 2027 may see the arrival of robots that can do tasks in the real world.”

To do the kind of real-world tasks Altman imagines, robots would likely need to be humanoid; our world is, after all, designed to be used by humans.

Altman says “...robots that can build other robots … aren’t that far off. If we have to make the first million humanoid robots the old-fashioned way, but then they can operate the entire supply chain – digging and refining minerals, driving trucks, running factories, etc – to build more robots, which can build more chip fabrication facilities, data centers, etc, then the rate of progress will obviously be quite different.”

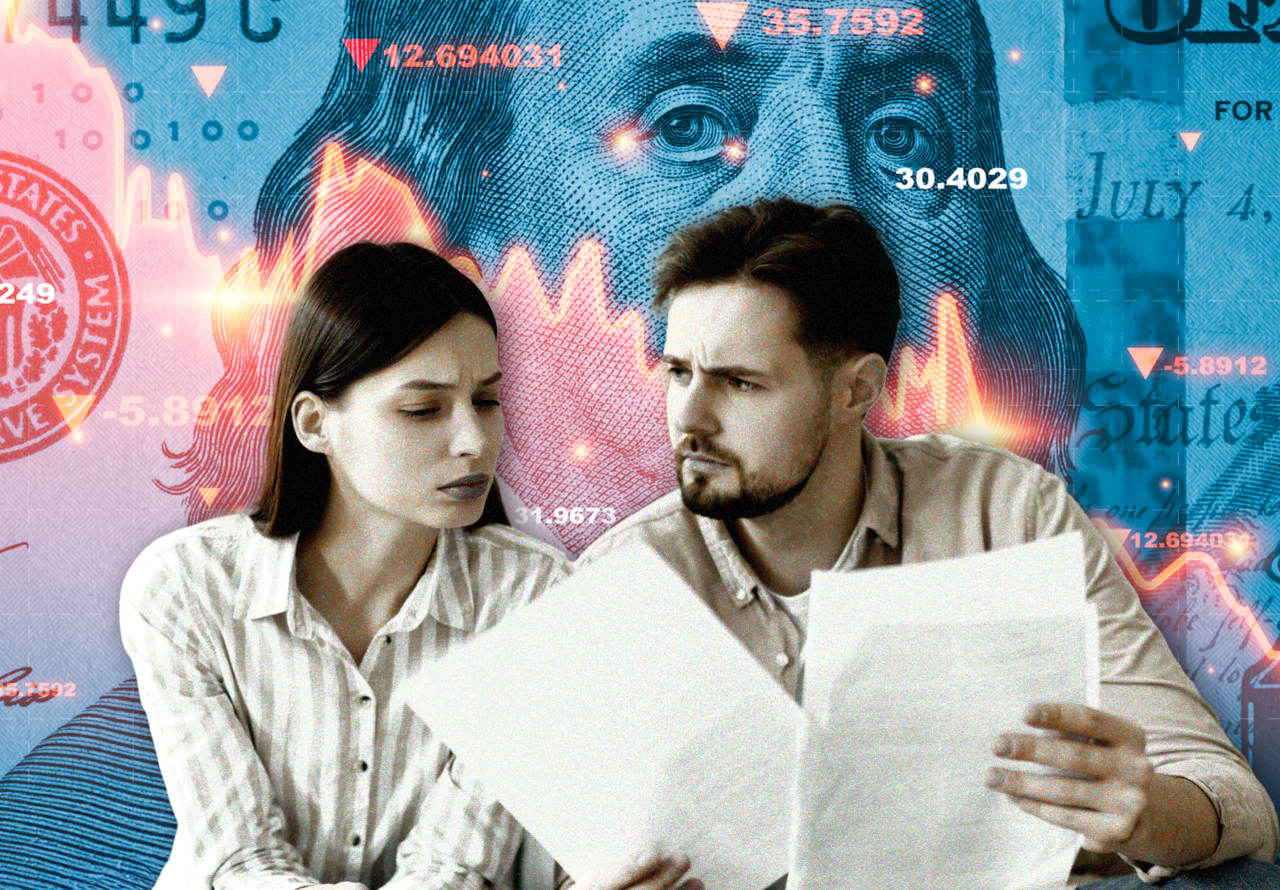

2. Job losses but also opportunities

Altman says society will have to adapt to AI, which will bring job losses on the one hand and new opportunities on the other:

“The rate of technological progress will keep accelerating, and it will continue to be the case that people are capable of adapting to almost anything. There will be very hard parts like whole classes of jobs going away, but on the other hand the world will be getting so much richer so quickly that we’ll be able to seriously entertain new policy ideas we never could before.”

Altman seems to balance the changing job landscape with the new opportunities that superintelligence will bring: “...maybe we will go from solving high-energy physics one year to beginning space colonization the next year; or from a major materials science breakthrough one year to true high-bandwidth brain-computer interfaces the next year.”

3. AGI will be cheap and widely available

In Altman’s bold new future, superintelligence will be cheap and widely available. When describing the best path forward, Altman first suggests we solve the “alignment problem”, which involves getting “...AI systems to learn and act towards what we collectively really want over the long-term”.

“Then [we need to] focus on making superintelligence cheap, widely available, and not too concentrated with any person, company, or country … Giving users a lot of freedom, within broad bounds society has to decide on, seems very important. The sooner the world can start a conversation about what these broad bounds are and how we define collective alignment, the better.”

It ain’t necessarily so

Altman’s blog post carries a sense of inevitability: in his telling, humanity is marching uninterrupted towards AGI. It’s as if he has seen the future and there’s no room for doubt in his vision, but is he right?

Altman’s vision stands in stark contrast to a recent paper from Apple suggesting that we are a lot farther away from achieving AGI than many AI advocates would like to believe.

“The Illusion of Thinking”, a new research paper from Apple, states that “despite their sophisticated self-reflection mechanisms learned through reinforcement learning, these models fail to develop generalizable problem-solving capabilities for planning tasks, with performance collapsing to zero beyond a certain complexity threshold.”

The research examined Large Reasoning Models (LRMs), such as OpenAI’s o1/o3 models and Claude 3.7 Sonnet Thinking.

“Particularly concerning is the counterintuitive reduction in reasoning effort as problems approach critical complexity, suggesting an inherent compute scaling limit in LRMs,” the paper says.

In contrast, Altman is convinced that “Intelligence too cheap to meter is well within grasp. This may sound crazy to say, but if we told you back in 2020 we were going to be where we are today, it probably sounded more crazy than our current predictions about 2030.”

As with all predictions about the future, we’ll find out if Altman is right soon enough.