Meta to replace human content moderators with AI tools

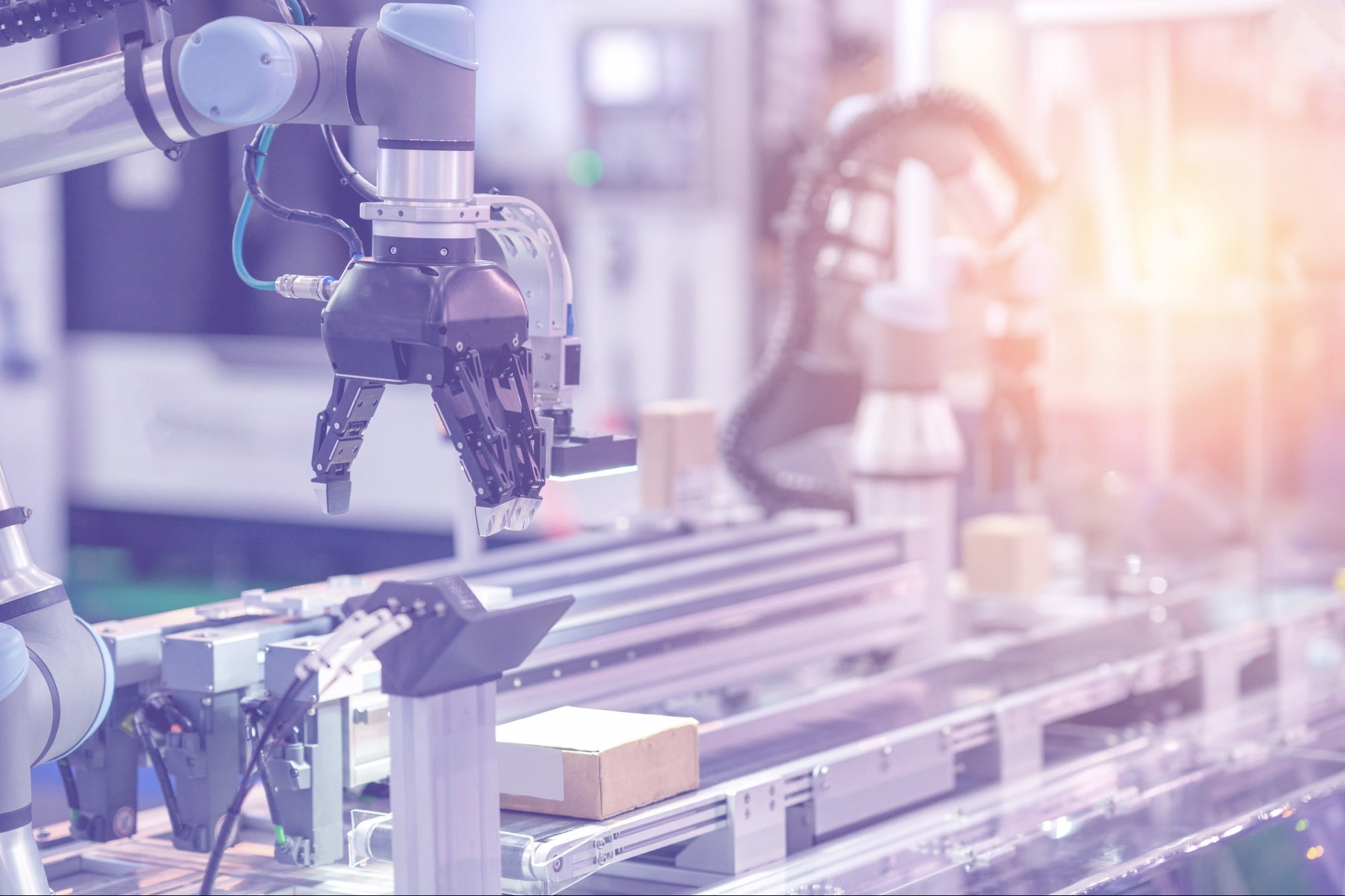

Meta plans to replace human content moderators with AI tools, sparking ethical, regulatory, and safety concerns.

Meta is reportedly planning a major restructuring of its content moderation strategy by replacing a significant portion of its human trust and safety staff with artificial intelligence tools. The move, which is part of Meta's cost-optimisation efforts, raises critical questions about the future of content moderation on large social platforms.

A shift toward automation

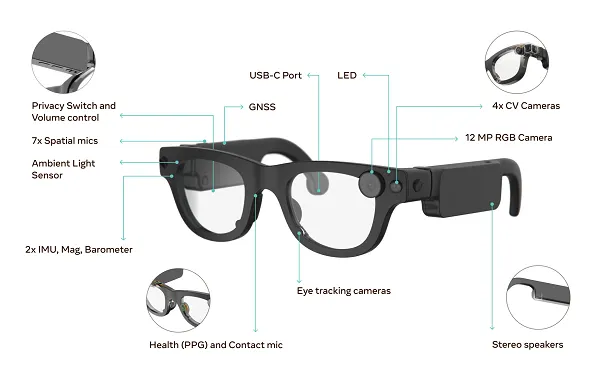

The company has been using AI to detect and filter harmful content for years, but internal reports suggest a more aggressive push is underway to reduce dependence on human moderators. Meta believes advanced AI models can now handle a larger volume of content with improved speed and consistency, especially across text, image, and video formats.

Concerns about nuance and bias

Critics argue that while AI can enhance efficiency, it lacks the human judgment required for complex moderation decisions. Cases involving hate speech, misinformation, or cultural sensitivity often require contextual understanding, which AI still struggles to provide. Overreliance on automated systems could lead to both over-censorship and under-enforcement.

The ethics of replacing humans

Beyond technical concerns, the move raises ethical questions around labour, transparency, and corporate accountability. Content moderators have long raised concerns about their working conditions, but their role is also seen as vital to maintaining platform safety. Replacing them with AI may erode public trust, especially if moderation errors go unaddressed.

Industry trends and regulatory outlook

Meta’s decision comes as other tech giants explore similar strategies. However, regulators in Europe and the US are increasingly scrutinising how platforms manage harmful content. Automation could invite tighter oversight, particularly if AI-driven systems fail to meet transparency and fairness benchmarks.
