Reality check: Microsoft Azure CTO pushes back on AI vibe coding hype, sees ‘upper limit’

REDMOND, Wash. — Microsoft Azure CTO Mark Russinovich cautioned that “vibe coding” and AI-driven software development tools aren’t capable of replacing human programmers for complex software projects, contrary to the industry’s most optimistic aspirations for artificial intelligence.

Russinovich, giving the keynote Tuesday at a Technology Alliance startup and investor event, acknowledged the effectiveness of AI coding tools for simple web applications, basic database projects, and rapid prototyping, even when used by people with little or no programming experience.

However, he said these tools often break down when handling the most complex software projects that span multiple files and folders, and where different parts of the code rely on each other in complicated ways — the kinds of real-world development work that many professional developers tackle daily.

“These things are right now still beyond the capabilities of our AI systems,” he said. “You’re going to see progress made. They’re going to get better. But I think that there’s an upper limit with the way that autoregressive transformers work that we just won’t get past.”

Even five years from now, he predicted, AI systems won’t be independently building complex software at the highest level, or working with the most sophisticated code bases.

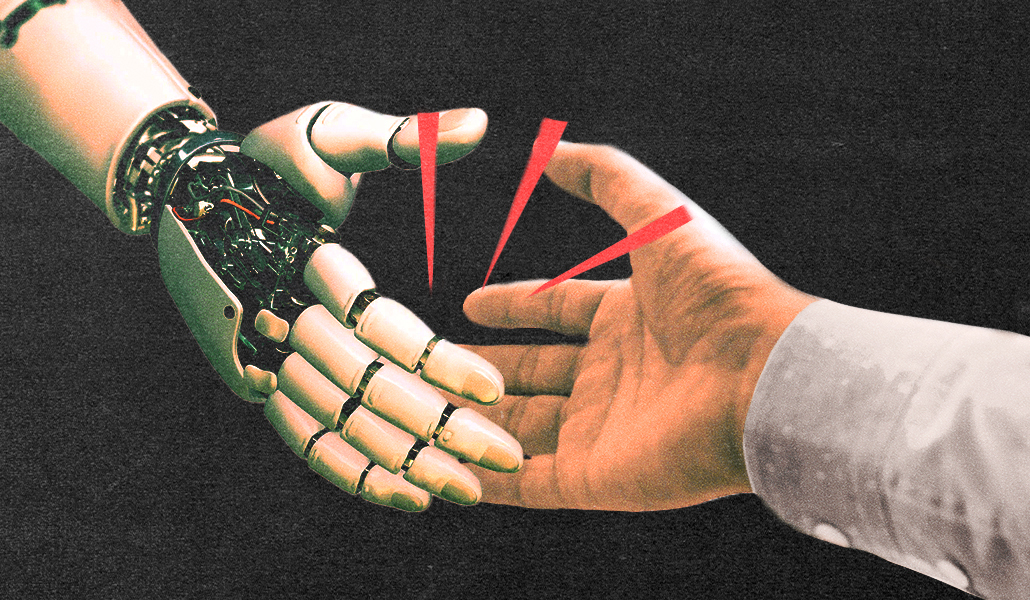

Instead, he said, the future lies in AI-assisted coding, where AI helps developers write code but humans maintain oversight of architecture and complex decision-making. This is more in line with Microsoft’s original vision of AI as a “Copilot,” a term that originated with the company’s GitHub Copilot AI-powered coding assistant.

Russinovich, a longtime Microsoft technical and cloud leader, gave an insider’s overview of the AI landscape, including reasoning models that can think through complex problems before responding; the decline in unit costs for training and running AI models; and the growing importance of small language models that can run efficiently on edge devices.

He described how the use of computing resources has flipped, from primarily training AI models in the past to now running AI inference, as usage of artificial intelligence has soared.

He also discussed the emergence of agentic AI systems that can operate autonomously — reflecting a big push this year for Microsoft and other tech giants — as well as AI’s growing contributions to scientific discoveries, such as the newly announced Microsoft Discovery.

But Russinovich, who is also Azure’s chief information security officer, kept gravitating back to AI limitations, offering a healthy reality check overall.

He discussed his own AI safety research, including a technique that he and other Microsoft researchers developed called “crescendo” that can trick AI models into providing information they’d otherwise refuse to give.

The crescendo method works like a “foot in the door” psychological attack, he explained, where someone starts with innocent questions about a forbidden topic and gradually pushes the AI to reveal more detailed information.

Ironically, he noted, the crescendo technique was referenced in a recent research paper that made history as the first largely AI-generated research ever accepted into a tier-one scientific conference.

Russinovich also delved extensively into ongoing AI hallucination problems, showing examples of incorrect AI-generated answers: one from Google to a question about the time of day in the Cook Islands, and one from Microsoft Bing to a question about the current year.

“AI is very unreliable. That’s the takeaway here,” he said. “And you’ve got to do what you can to control what goes into the model, ground it, and then also verify what comes out of the model.”

Depending on the use case, Russinovich added, “you need to be more rigorous or not, because of the implications of what’s going to happen.”