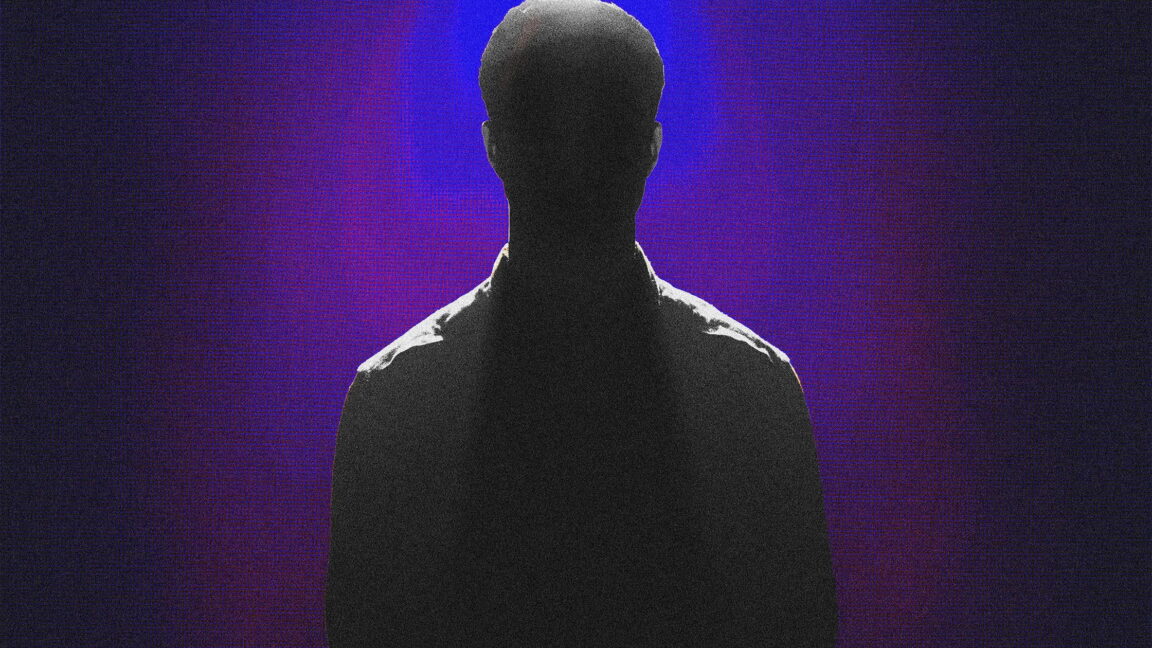

Hugging Face launches humanoid robots to let AI physically interact with the world

Hugging Face debuts two open-source humanoid robots designed to advance embodied AI research with real-world applications.

Hugging Face, the collaborative AI platform known for hosting a vast library of open machine learning models, has entered the world of robotics with the launch of two humanoid robots.

Dubbed LeRobot and Hugbot, the robots are part of a broader initiative to make embodied artificial intelligence—AI that can interact physically with the world—more accessible to researchers and developers.

The announcement, made at the AI Frontiers Research Summit, marks Hugging Face’s first move beyond digital platforms. The robots are designed to serve as testbeds for integrating language models with real-world physical systems, a growing area of interest in the AI research community.

Bridging models with motion

Both robots feature modular hardware, multi-joint limbs, camera-based vision, and compatibility with Hugging Face’s extensive open-source model libraries. They are built for experimentation in tasks such as object manipulation, navigation, gesture learning, and conversational interaction with humans.

Unlike commercial humanoid robots such as Tesla's Optimus or Figure AI's bipedal machines, Hugging Face's robots are not designed for mass production or household use. Instead, they serve as platforms for academic and hobbyist communities to explore the interface between language models and real-world behaviour.

Open-source from the ground up

Hugging Face has released detailed documentation, baseline control models, and simulation environments alongside the hardware. Researchers can train the robots using reinforcement learning, imitation learning, or fine-tuned large language models accessed through Hugging Face's API infrastructure.

The decision to open-source both the software and hardware schematics aligns with the company’s broader mission to democratise AI development. Already, several research labs and universities have signed up to pilot the robots as part of collaborative research efforts.

Why robotics, and why now?

The move into robotics may seem unexpected for a company best known for hosting text-based AI models such as BERT, GPT-2, and LLaMA. However, interest in embodied AI is growing rapidly, with leading labs exploring how to ground abstract reasoning in physical interaction.

The rationale is straightforward: large language models offer flexible reasoning and task understanding, while humanoid robots provide a physical medium in which to test and refine those capabilities. The Hugging Face team sees this as a natural evolution of its platform, from pure computation to computational embodiment.
