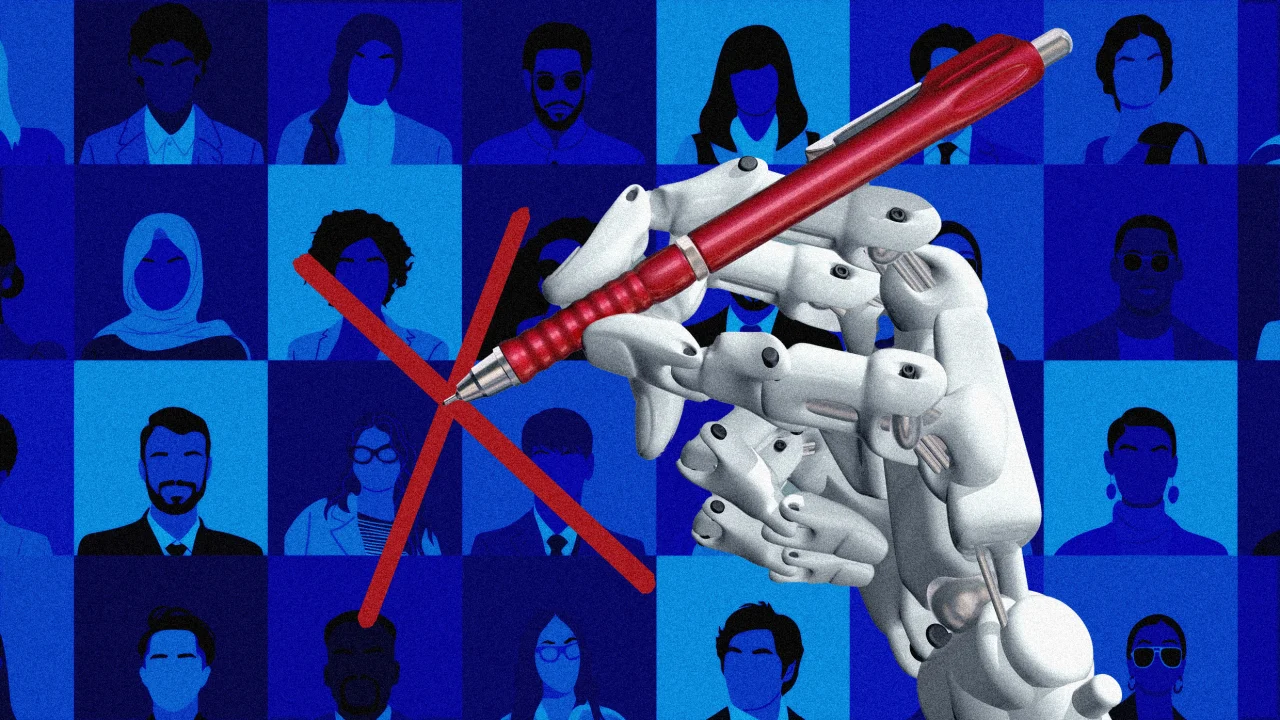

Your AI hiring tool may be rejecting qualified candidates

Like so many lines of business, HR departments are increasingly relying on generative artificial intelligence tools. According to Insight Global’s “2025 AI in Hiring” report, 92% of hiring managers say they are using AI for screening résumés or prescreening interviews and more than half (57%) are using it for skills assessments.

However, even as more teams rely on AI, especially for screening early in the hiring process, the cost may be the very talent companies are seeking. A 2021 report by Harvard Business School and Accenture found that applicant tracking systems were screening out good candidates. According to the report, 88% of employers said that qualified, highly skilled candidates were vetted out of the process by their applicant tracking systems because they did not match the exact job description criteria. The percentage for middle-skills workers was even higher (94%). As candidates have a harder time finding jobs and companies still struggle to find great talent, that’s a problem.

“I think that many companies have jumped the gun and have implemented some of these tools to help on the operations side,” says Hope-Elizabeth Sonam, head of community at marketing firm We Are Rosie. But while recruiters and hiring managers have been looking for productivity improvements, they may not be taking enough time to “make sure that the tools that they’re using are creating a fair, inclusive, whole, human approach to how talent is being scrutinized in the process,” she says.

While candidate screening tools do offer help to overwhelmed HR teams, they also need thoughtful implementations—and a few safeguards—to ensure that they’re serving up the most comprehensive list of talent available.

Understand the vetting criteria

Sonam says that teams must understand how their tools are vetting candidates. “Many end users of these tools . . . don’t understand how the decisions are being made,” she says. “They don’t understand the logic that is behind this machine learning that is, let’s say, scoring their matches a 2 out of 10 fit.” Ask questions about how the tools filter talent, evaluate skills, and perform other functions, she advises.

Anoop Gupta, cofounder and CEO of talent sourcing platform SeekOut, advises opting for tools that use semantic match, which derives meaning from language context rather than simply searching for keywords. That way, he says, “you’re not filtering out people and you’re not filtering in people who have just padded their résumé with a variety of keywords.” When you understand such criteria, you can adapt your approach and data to help search for certain skills or experience.
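To make the difference concrete, here is a minimal Python sketch of the two screening styles. It is an illustration only, not how SeekOut or any vendor works: the `CONCEPTS` map, the function names, and the sample résumé are all hypothetical, and the hand-written phrase map is a crude stand-in for what a real semantic matcher would infer from language context.

```python
import re

# Hypothetical concept map: a hand-written stand-in for the related phrases
# a real semantic matcher would infer from language context.
CONCEPTS = {
    "project management": {"project management", "led a team", "managed projects"},
    "python": {"python", "pandas", "django"},
}


def normalize(text):
    """Lowercase the text and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", text.lower())


def keyword_match(resume, required):
    """Exact-phrase screen: passes only if every required phrase appears verbatim."""
    text = normalize(resume)
    return all(skill in text for skill in required)


def concept_match(resume, required):
    """Looser screen: passes if any phrase mapped to each required concept appears."""
    text = normalize(resume)
    return all(
        any(phrase in text for phrase in CONCEPTS.get(skill, {skill}))
        for skill in required
    )


required = ["project management", "python"]
resume = "Led a team of six engineers; built data pipelines with pandas."

print(keyword_match(resume, required))  # the exact-phrase screen rejects this résumé
print(concept_match(resume, required))  # the looser screen accepts it
```

The sketch only shows the first half of Gupta's point, a qualified candidate slipping past an exact-phrase filter. It does nothing to catch résumés padded with keywords, which would need a matcher that actually weighs context.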

Review your training data

Eric Sullano, cofounder of JumpSearch, an AI-powered recruitment platform, says that the data used to train the AI screening systems needs to be carefully reviewed and monitored. Some companies may be so focused on “trying to track that magical mix of employees that have been successful at their companies,” that they inadvertently train their screening systems to eliminate people who don’t match those patterns, he adds.

So, for example, if a company has hired a number of people from specific universities, the platform may begin to deprioritize candidates who did not attend those schools. “They need to be aware of the data that’s being reflected of their current organizations and their current ‘bias,’” Sullano says. That way, they can be aware of areas where they may need to look more broadly at skills or unconventional candidates to find the skills they need.
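One simple way to spot the university pattern Sullano describes is to measure how concentrated past hires are before that history is used as training data. The Python sketch below assumes a hypothetical list of hire records with a `school` field and an arbitrary 50% threshold; neither comes from any particular platform.

```python
from collections import Counter


def school_shares(hires):
    """Return each school's share of past hires, largest first."""
    counts = Counter(h["school"] for h in hires)
    total = sum(counts.values())
    return {school: n / total for school, n in counts.most_common()}


def overrepresented(hires, threshold=0.5):
    """List schools whose share of past hires meets or exceeds the threshold."""
    return [s for s, share in school_shares(hires).items() if share >= threshold]


# Hypothetical hiring history, for illustration only.
past_hires = [
    {"name": "A", "school": "State U"},
    {"name": "B", "school": "State U"},
    {"name": "C", "school": "State U"},
    {"name": "D", "school": "City College"},
]

print(school_shares(past_hires))
print(overrepresented(past_hires))
```

A school that dominates the list is not proof of bias, but it marks a pattern a screening model could learn to favor, and so a place for the team to look more closely.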

Audit and improve

Fine-tuning screening tools to be accurate and inclusive takes time and human intervention, Sonam says. Periodic auditing of data and results is essential to ensure that the criteria and outcomes reflect the organization’s best interest. “It really becomes a data science and HR partnership,” she says. “How can [these tools] be optimized with the whole human in mind if you care about fair and inclusive hiring practices,” she asks, not to mention complying with legal and regulatory requirements.

Sullano agrees. “The best systems will provide some level of audit capabilities of what is going on with the AI, how it’s making its decisions, what context it has,” he says. Humans need to review that information regularly to ensure that the right decisions are being made and, if not, that changes can be implemented to correct mistakes or eliminate overly rigorous screening that may be costing the organization good candidates.

“[If there’s] a gray area, the hiring managers need to be able to have insight into, and a human in the loop. Feedback is going to be very important,” he says. And that’s an area where humans will always need to be involved, Sonam adds.
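The kind of periodic human review described above can be sketched as a small check: have reviewers independently judge a sample of applicants, then count how many of the candidates they considered qualified were rejected by the tool. The record fields (`tool_passed`, `human_qualified`) and the sample data below are assumptions made for illustration, not the output of any real screening system.

```python
def false_rejection_rate(sample):
    """Share of human-judged qualified candidates that the tool rejected."""
    qualified = [r for r in sample if r["human_qualified"]]
    if not qualified:
        return 0.0  # nothing to measure if reviewers found no qualified candidates
    rejected = [r for r in qualified if not r["tool_passed"]]
    return len(rejected) / len(qualified)


# Hypothetical audit sample: one record per applicant, each reviewed by a human.
audit_sample = [
    {"id": 1, "tool_passed": True, "human_qualified": True},
    {"id": 2, "tool_passed": False, "human_qualified": True},
    {"id": 3, "tool_passed": False, "human_qualified": False},
    {"id": 4, "tool_passed": False, "human_qualified": True},
]

rate = false_rejection_rate(audit_sample)
print(f"Qualified candidates rejected by the tool: {rate:.0%}")
```

A rising rate from one audit to the next would suggest the screening criteria have become too strict and need a human to revisit them.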
