Why we’re measuring AI success all wrong—and what leaders should do about it

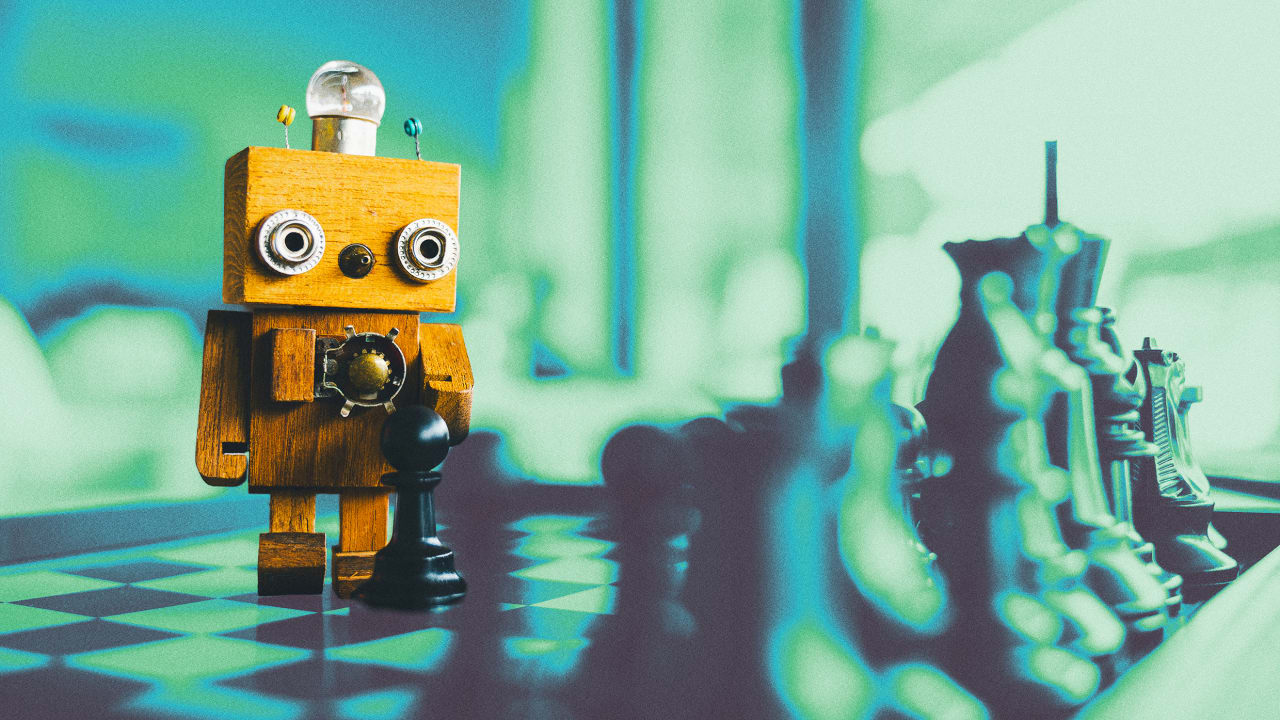

Here’s a troubling reality check: We are currently evaluating artificial intelligence in the same way that we’d judge a sports car. We act like an AI model is good if it is fast and powerful. But what we really need to assess is whether it makes for a trusted and capable business partner.

The way we approach assessment matters. As AI models begin to play a part in everything from hiring decisions to medical diagnoses, our narrow focus on benchmarks and accuracy rates is creating blind spots that could undermine the very outcomes we’re trying to achieve. In the long term, it is effectiveness, not efficiency, that matters.

Think about it: When you hire someone for your team, do you only look at their test scores and the speed they work at? Of course not. You consider how they collaborate, whether they share your values, whether they can admit when they don’t know something, and how they’ll impact your organization’s culture—all the things that are critical to strategic success. Yet when it comes to the technology that is increasingly making decisions alongside us, we’re still stuck on the digital equivalent of standardized test scores.

The Benchmark Trap

Walk into any tech company today, and you’ll hear executives boasting about their latest performance metrics: “Our model achieved 94.7% accuracy!” or “We reduced token usage by 20%!” These numbers sound impressive, but they tell us almost nothing about whether these systems will actually serve human needs effectively.

Despite significant tech advances, evaluation frameworks remain stubbornly focused on performance metrics while largely ignoring ethical, social, and human-centric factors. It’s like judging a restaurant solely on how fast it serves food while ignoring whether the meals are nutritious, safe, or actually taste good.

This measurement myopia is leading us astray. Many recent studies have found high levels of bias toward specific demographic groups when AI models are asked to make decisions about individuals in relation to tasks such as hiring, salary recommendations, loan approvals, and sentencing. These outcomes are not just theoretical. For instance, facial recognition systems deployed in law enforcement contexts continue to show higher error rates when identifying people of color. Yet these systems often pass traditional performance tests with flying colors.

The disconnect is stark: We’re celebrating technical achievements while people’s lives are being negatively impacted by our measurement blind spots.
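To see how a strong headline number can hide this kind of gap, consider a minimal sketch. The records, group labels, and function name below are invented for illustration and do not come from any study cited here. The sketch computes the overall error rate alongside the error rate for each group.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return (overall_error_rate, {group: error_rate}).

    Each record is a (group, predicted, actual) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    overall = sum(errors.values()) / sum(totals.values())
    per_group = {g: errors[g] / totals[g] for g in totals}
    return overall, per_group


# Hypothetical evaluation set: group A has 900 records with 18 errors,
# group B has 100 records with 20 errors.
records = (
    [("A", 1, 1)] * 882 + [("A", 1, 0)] * 18
    + [("B", 1, 1)] * 80 + [("B", 1, 0)] * 20
)

overall, per_group = error_rates_by_group(records)
print(f"overall accuracy: {1 - overall:.1%}")
for group, rate in sorted(per_group.items()):
    print(f"group {group} error rate: {rate:.1%}")
```

In this made-up example the model reports 96.2% overall accuracy, yet group B sees errors ten times as often as group A. A benchmark that reports only the first number would never surface the second.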

Real-World Lessons

IBM’s Watson for Oncology was once pitched as a revolutionary breakthrough that would transform cancer care. When measured using traditional metrics, the AI model appeared to be highly impressive, processing vast amounts of medical data rapidly and generating treatment recommendations with clinical sophistication.

However, as Scientific American reported, reality fell far short of this promise. When major cancer centers implemented Watson, significant problems emerged. The system’s recommendations often didn’t align with best practices, in part because Watson was trained primarily on a limited number of cases from a single institution rather than a comprehensive database of real-world patient outcomes.

The disconnect wasn’t in Watson’s computational capabilities. According to traditional performance metrics, it functioned as designed. The gap appeared only under human-centered evaluation: Did it improve patient outcomes? Did it augment physician expertise effectively? When measured against these standards, Watson struggled to prove its value, leading many healthcare institutions to abandon the system.

Prioritizing Dignity

Microsoft’s Seeing AI is an example of what happens when companies measure success through a human-centered lens from the beginning. As Time magazine reported, the Seeing AI app emerged from Microsoft’s commitment to accessibility innovation, using computer vision to narrate the visual world for blind and low-vision users.

What sets Seeing AI apart isn’t just its technical capabilities but how the development team prioritized human dignity and independence over pure performance metrics. Microsoft worked closely with the blind community throughout the design and testing phases, measuring success not by accuracy percentages alone, but by how effectively the app enhanced the ability of users to navigate their world independently.

This approach created technology that genuinely empowers users, providing real-time audio descriptions that help with everything from selecting groceries to navigating unfamiliar spaces. The lesson: When we start with human outcomes as our primary success metric, we build systems that don’t just work—they make life meaningfully better.

Five Critical Dimensions of Success

Smart leaders are moving beyond traditional metrics to evaluate systems across five critical dimensions:

1. Human-AI Collaboration. Rather than measuring performance in isolation, assess how well humans and technology work together. Recent research in the Journal of the American College of Surgeons showed that AI-generated postoperative reports were only half as likely to contain significant discrepancies as those written by surgeons alone. The key insight: a careful division of labor between humans and machines can improve outcomes while leaving humans free to spend more time on what they do best.

2. Ethical Impact and Fairness. Incorporate bias audits and fairness scores as mandatory evaluation metrics. This means continuously assessing whether systems treat all populations equitably and impact human freedom, autonomy, and dignity positively.

3. Stability and Self-Awareness. A Nature Scientific Reports study found performance degradation over time in 91 percent of the models it tested once they were exposed to real-world data. Instead of just measuring a model’s out-of-the-box accuracy, track performance over time and assess the model’s ability to identify performance dips and escalate to human oversight when its confidence drops.

4. Value Alignment. As the World Economic Forum’s 2024 white paper emphasizes, AI models must operate in accordance with core human values if they are to serve humanity effectively. This requires embedding ethical considerations throughout the technology lifecycle.

5. Long-Term Societal Impact. Move beyond narrow optimization goals to assess alignment with long-term societal benefits. Consider how technology affects authentic human connections, preserves meaningful work, and serves the broader community good.
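For teams that want a starting point, the third dimension is the easiest to sketch in code. The example below is a rough illustration, not a prescribed method: the function names, the 5-point tolerance, and the 0.8 confidence threshold are all assumptions chosen for the example. It shows the two checks described above: comparing recent accuracy against a baseline, and routing low-confidence predictions to a person.

```python
from statistics import mean


def accuracy_dropped(baseline_accuracy, recent_outcomes, tolerance=0.05):
    """Return True when recent accuracy is more than `tolerance` below baseline.

    `recent_outcomes` is a list of booleans, one per recent prediction,
    where True means the prediction turned out to be correct.
    """
    if not recent_outcomes:
        return False  # nothing to compare yet
    recent_accuracy = mean(1.0 if ok else 0.0 for ok in recent_outcomes)
    return (baseline_accuracy - recent_accuracy) > tolerance


def route_decision(prediction, confidence, min_confidence=0.8):
    """Send low-confidence predictions to a human reviewer."""
    if confidence < min_confidence:
        return ("human_review", prediction)
    return ("automated", prediction)


# Hypothetical usage: a model that scored 95% at launch now gets 8 of 10 right.
if accuracy_dropped(0.95, [True] * 8 + [False] * 2):
    print("Accuracy has slipped below tolerance; flag for review.")

print(route_decision("approve", confidence=0.62))
print(route_decision("approve", confidence=0.93))
```

The thresholds are the real design decision here. They should be set with the people affected by the decisions, not picked by the engineering team alone.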

The Leadership Imperative: Detach and Devote

To transform how your organization measures AI success, embrace the “Detach and Devote” paradigm we describe in our book TRANSCEND:

Detach from:

- Narrow efficiency metrics that ignore human impact

- The assumption that replacing human labor is inherently beneficial

- Approaches that treat humans as obstacles to optimization

Devote to:

- Supporting genuine human connection and collaboration

- Preserving meaningful human choice and agency

- Serving human needs rather than reshaping humans to serve technological needs

The Path Forward

Forward-thinking leaders implement comprehensive evaluation approaches in three steps: they start with the desired human outcomes, establish continuous human input loops, and measure results against the goals of human stakeholders.

The companies that get this right won’t just build better systems—they’ll build more trusted, more valuable, and ultimately more successful businesses. They’ll create technology that doesn’t just process data faster but that genuinely enhances human potential and serves societal needs.

The stakes couldn’t be higher. As these AI models become more prevalent in critical decisions around hiring, healthcare, criminal justice, and financial services, our measurement approaches will determine whether these models serve humanity well or perpetuate existing inequalities.

In the end, the most important test of all is whether using AI for a task makes human lives genuinely better. The question isn’t whether your technology is fast enough but whether it’s human enough. That is the only metric that ultimately matters.
