Unlocking the potential of dynamic, scalable data workflows with Snowflake

Generative AI has been around for a while, but its adoption has hit roadblocks. Data often wasn't production grade, and structured, semi-structured and unstructured data could not be brought together in a single view, among other hurdles.

Fortunately, Snowflake addresses these pain points. It is a cloud-based data platform that serves as a single solution for data-related tasks such as data warehousing and data engineering.

A hands-on lab workshop on ‘Ingesting, Transforming, and Delivering Data in Snowflake’ at YourStory DevSparks 2025 took participants through the journey from data ingestion to actionable insights.

Led by Deepjyoti Dev, Senior Data Cloud Architect at Snowflake, the session explored real-time data pipelines, efficient processing techniques, and delivery strategies that drive business impact.

Behind the scenes

Data engineering is about building pipelines that make data functional, simplify transformations, and streamline the delivery of data sets.

During the workshop, Dev shared a few tips. For instance, he said loading data into Snowflake is essential because it gives users the freedom to work across different parts of the workflow in any language they want.

“We started with SQL but we are great on Python or JavaScript. Our goal is to make the platform easy, efficient and trusted. Trust is important and the platform lets you access elements assigned to you,” Dev said.

Data engineers work to make data more consumable and usable. They also transform data formats, scale data systems, and enforce data quality and security.

Snowflake also supports their observability and DevOps work. The platform offers AI ops capabilities as well, making the journey behind an AI-generated answer visible.

“This is crucial, particularly for compliance. You can't just say AI gave me an answer…here, you can show why it gave you a certain answer,” Dev said.

He also spoke about another big problem in the AI ecosystem: hallucination, which he described as “more of a hidden trial”. “Snowflake gives you the ammunition to go into a fight where you know the enemy well. It explains to you where it came from and also shows you how it came to it.”

Navigating the journey

The hands-on lab began with batch ingestion, pulling data from a marketplace. Because good data sets to work with are often hard to find, Snowflake has built one of the biggest data marketplaces.

“We started off as a data marketplace but we also work as an application marketplace today. You can develop apps, move them to the Snowflake ecosystem and deploy from there,” Dev said.

The next step was to build user-defined functions (UDFs) with Snowpark. The final part focused on Streamlit in Snowflake, which lets users build Streamlit applications and deploy them within Snowflake.
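The article does not say which calculations the lab's user-defined functions perform. Assuming they are unit conversions of the kind weather data often needs (Fahrenheit to Celsius, inches to millimetres), a minimal sketch of the function logic in plain Python might look like this. The function names are hypothetical, and the sketch does not call Snowpark itself; registering such functions as UDFs would go through Snowpark's own registration API.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius.

    Hypothetical UDF body; the lab's actual functions are not documented here.
    """
    return (temp_f - 32.0) * 5.0 / 9.0


def inch_to_millimeter(length_in: float) -> float:
    """Convert a length (e.g. precipitation) from inches to millimetres.

    Hypothetical UDF body; 1 inch is defined as exactly 25.4 mm.
    """
    return length_in * 25.4


if __name__ == "__main__":
    print(round(fahrenheit_to_celsius(50.0), 2))  # 10.0
    print(round(inch_to_millimeter(2.0), 2))  # 50.8
```

Keeping the logic in small, pure functions like these makes it easy to test locally before wrapping it as a UDF that runs next to the data.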

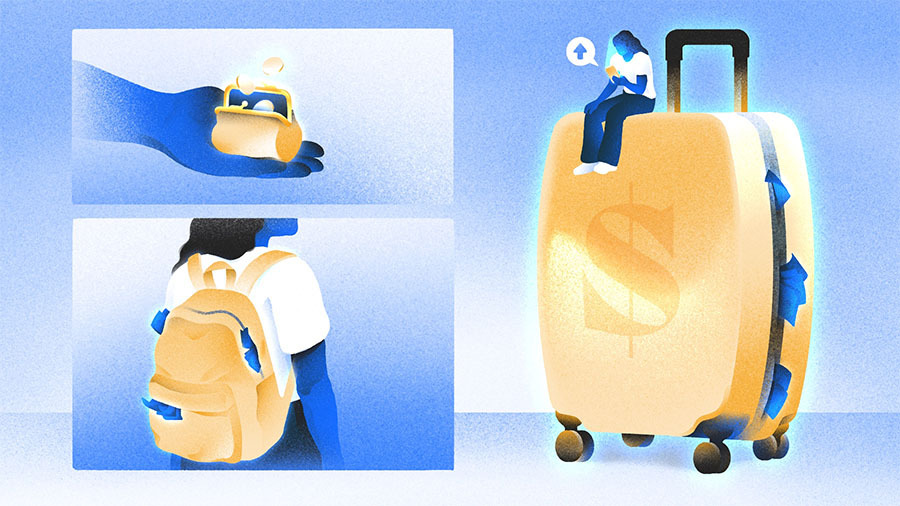

Dev took participants through an end-to-end journey using the example of Tasty Bytes, a food truck company in Hamburg whose sales dropped sharply within a month. Participants had to work out the reason for the drop while building data pipelines that could keep analysts up to date on the weather there.

With Dev’s guidance, participants worked through a series of steps to reach a solution. They began by opening a Snowflake trial account, reviewing the pipeline they would build, getting weather data from the Snowflake Marketplace, and loading sales data from Amazon S3. The remaining steps covered transforming data with SQL, creating user-defined functions for calculations, reporting a mistake, and delivering insights with Streamlit in Snowflake.
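To make the data transformation with SQL step concrete, here is a minimal sketch that joins per-order sales with daily weather and aggregates by date. It uses Python's built-in sqlite3 module as a local stand-in for Snowflake, so the SQL dialect differs in places. The table names, columns, and sample rows are all hypothetical; the lab's actual schema is not described in the article.

```python
import sqlite3


def load_sample_data(conn: sqlite3.Connection) -> None:
    """Create and populate hypothetical sales and weather tables."""
    conn.execute("CREATE TABLE orders (order_date TEXT, city TEXT, amount REAL)")
    conn.execute(
        "CREATE TABLE weather "
        "(obs_date TEXT, city TEXT, avg_temp_f REAL, max_wind_mph REAL)"
    )
    # Made-up rows for illustration only: one row per order.
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [
            ("2024-02-01", "Hamburg", 120.0),
            ("2024-02-01", "Hamburg", 80.0),
            ("2024-02-02", "Hamburg", 40.0),
        ],
    )
    # Made-up rows for illustration only: one row per city per day.
    conn.executemany(
        "INSERT INTO weather VALUES (?, ?, ?, ?)",
        [
            ("2024-02-01", "Hamburg", 41.0, 10.0),
            ("2024-02-02", "Hamburg", 39.0, 45.0),
        ],
    )
    conn.commit()


def daily_sales_with_weather(conn: sqlite3.Connection, city: str) -> list:
    """Sum order amounts per day for one city and attach that day's weather."""
    query = """
        SELECT o.order_date,
               SUM(o.amount) AS daily_sales,
               w.avg_temp_f,
               w.max_wind_mph
        FROM orders AS o
        JOIN weather AS w
          ON w.obs_date = o.order_date AND w.city = o.city
        WHERE o.city = ?
        GROUP BY o.order_date, w.avg_temp_f, w.max_wind_mph
        ORDER BY o.order_date
    """
    return conn.execute(query, (city,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample_data(conn)
    for row in daily_sales_with_weather(conn, "Hamburg"):
        print(row)
```

Aggregating to one row per day before joining keeps the result at the grain analysts usually want: daily sales next to daily weather.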

The process gave participants insights into daily sales, temperature, and wind speeds. It turned out that high wind speeds were deterring people from visiting the food truck. These findings could then be shared with colleagues and stakeholders through a link, so they could keep track.
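The finding that high wind speeds kept customers away is the kind of relationship a simple correlation check can surface. A minimal sketch, using made-up daily figures purely for illustration (they are not the workshop's data), computes the Pearson correlation between wind speed and sales. A strongly negative value would be consistent with wind deterring visitors, though correlation alone does not establish cause.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Return the Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances (unnormalised; the 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    denom = math.sqrt(var_x * var_y)
    if denom == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / denom


# Hypothetical daily observations: max wind speed (mph) and total sales.
wind_mph = [8.0, 12.0, 15.0, 30.0, 42.0, 50.0]
sales = [2100.0, 1950.0, 2000.0, 900.0, 400.0, 150.0]

r = pearson(wind_mph, sales)
print(f"wind vs sales correlation: {r:.2f}")
```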

“We are also now able to do unstructured streaming directly into Snowflake through an ingestion tool called Snowflake Openflow, which can be used in real time. This is a recent development,” said Dev.
