How can I keep data defragmented and sorted if it's large and often changes randomly?

This problem is very relevant to games and real-time simulations but it may have broader applications. It seems inherently difficult to solve. The problem: Imagine that you have a large buffer of data, maybe 50 to 100 mb (far more than the processor cache can hold at once). The buffer contains many objects that represent things in physical space (2D or 3D). The program performs a lot of geometry related calculations and accesses large areas of data frequently. Specifically it's performing spatial queries and raycasting with objects in space. As the program runs and accesses data, the cache often gets flushed out and has to jump around a lot. Cache misses will be a major problem for performance if not handled properly. The source of the cache misses is diffuse and fluctuates unpredictably. It's not coming from a particular function call or area of memory in any sort of consistent way; instead it's "death by a thousand cuts". Besides fragmentation bloating memory and making things further apart, another potential major cause of cache misses is poor sorting. There is an important order to the objects in memory. In a simulation which takes place in physical space, keeping the objects in memory sorted along a space filling curve (like a Morton curve or Z-curve) significantly reduces cache misses during spatial queries. Morton order outperforms row-major order in the general case where queries are in random directions in space. It keeps objects that are close together in physical space also close together in memory. To give a more tangible example, consider this animation of the buffer in a simulation with people, trees and the ground (the program is trying to create some people at Morton order 0 but it has to move stuff to make room; this will be described in more detail below): Regarding the 3 types of objects in the simulation: The ground doesn't really change, and it's the most plentiful type of object (probably a bunch of triangle meshes). It doesn't move either so it doesn't need to be sorted to respect Morton order. And it doesn't query anything. Trees change infrequently but occasionally one might fall over or get chopped down by a person. Perhaps a new tree could grow somewhere too. Like the ground, trees don't move so sorting isn't an issue. They also don't perform queries. People are the least numerous type of object but they change a lot. They can enter the area (get created) and also leave it or possibly die too (get destroyed). They move around the environment, so periodically you need to move their objects in memory so it matches their physical locations (respects Morton order). This way when they look around (raycasting) or perform other spatial queries, the objects they'll encounter in space will also be close to them in memory (so they can access them with minimal cache misses). Brainstorming solutions: If you group objects by Morton order in memory, and reserve free space between these groups, it becomes easier to add, remove, or sort objects in memory due to the free space (this is analogous to reserving space in vectors to avoid re-allocating frequently). But the extra space between everything generally increases cache misses which is not good. If you reserve very little or zero space between groups (as you can see in the animation), you get better cache performance during queries, but adding, removing and sorting becomes more difficult. When dealing with dozens or hundreds of mb worth of data, moving huge blocks to create a free spot is prohibitively expensive. The typical way to do this is to send free space to an area as a "pulse" or "bubble" that travels through the data by moving a couple of memory blocks at a time (each time step or frame). An object has to wait in a temporary buffer until the bubble arrives and creates a free spot. Accessing an object in this temporary buffer is expensive and causes cache misses (because it's far away from everything else in memory). But once the bubble arrives at its destination and a properly sorted spot becomes available, the object moves there and accessing it becomes efficient again. But if data changes frequently this can get very messy. A memory bubble might be halfway to its destination, only to find out that the object waiting in the temporary buffer has been destroyed. Or maybe the object waiting in the temporary buffer has moved in physical space, and now it needs to be re-sorted to a different memory location (so the bubble is now going the wrong way). These solutions are starting to seem overly complex and unnecessarily hard to debug and maintain. Given the problem described above, what defragmenting and sorting algorithm(s) would be suitable? Note: I'm aware of entity component systems as a solution to cache locality for these kinds of applications but I'm curious about a more traditional object-oriented approach that still achieves good locality. I find it very easy to reason about objects, especially when their locations in memory

This problem is very relevant to games and real-time simulations but it may have broader applications. It seems inherently difficult to solve.

The problem:

Imagine that you have a large buffer of data, maybe 50 to 100 mb (far more than the processor cache can hold at once). The buffer contains many objects that represent things in physical space (2D or 3D). The program performs a lot of geometry related calculations and accesses large areas of data frequently. Specifically it's performing spatial queries and raycasting with objects in space.

As the program runs and accesses data, the cache often gets flushed out and has to jump around a lot. Cache misses will be a major problem for performance if not handled properly. The source of the cache misses is diffuse and fluctuates unpredictably. It's not coming from a particular function call or area of memory in any sort of consistent way; instead it's "death by a thousand cuts".

Besides fragmentation bloating memory and making things further apart, another potential major cause of cache misses is poor sorting. There is an important order to the objects in memory. In a simulation which takes place in physical space, keeping the objects in memory sorted along a space filling curve (like a Morton curve or Z-curve) significantly reduces cache misses during spatial queries. Morton order outperforms row-major order in the general case where queries are in random directions in space. It keeps objects that are close together in physical space also close together in memory.

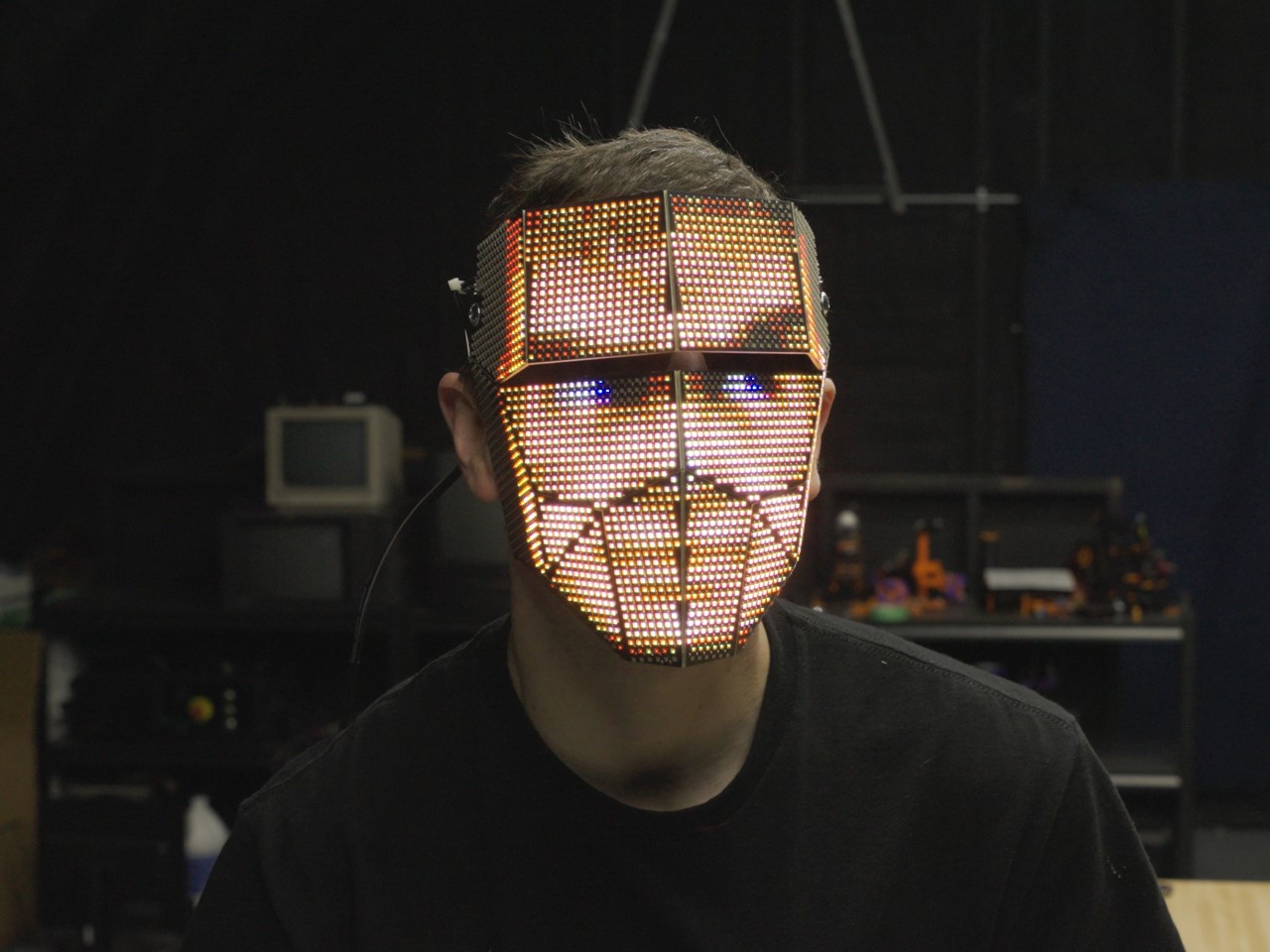

To give a more tangible example, consider this animation of the buffer in a simulation with people, trees and the ground (the program is trying to create some people at Morton order 0 but it has to move stuff to make room; this will be described in more detail below):

Regarding the 3 types of objects in the simulation:

The ground doesn't really change, and it's the most plentiful type of object (probably a bunch of triangle meshes). It doesn't move either so it doesn't need to be sorted to respect Morton order. And it doesn't query anything.

Trees change infrequently but occasionally one might fall over or get chopped down by a person. Perhaps a new tree could grow somewhere too. Like the ground, trees don't move so sorting isn't an issue. They also don't perform queries.

People are the least numerous type of object but they change a lot. They can enter the area (get created) and also leave it or possibly die too (get destroyed). They move around the environment, so periodically you need to move their objects in memory so it matches their physical locations (respects Morton order). This way when they look around (raycasting) or perform other spatial queries, the objects they'll encounter in space will also be close to them in memory (so they can access them with minimal cache misses).

Brainstorming solutions:

If you group objects by Morton order in memory, and reserve free space between these groups, it becomes easier to add, remove, or sort objects in memory due to the free space (this is analogous to reserving space in vectors to avoid re-allocating frequently). But the extra space between everything generally increases cache misses which is not good.

If you reserve very little or zero space between groups (as you can see in the animation), you get better cache performance during queries, but adding, removing and sorting becomes more difficult. When dealing with dozens or hundreds of mb worth of data, moving huge blocks to create a free spot is prohibitively expensive. The typical way to do this is to send free space to an area as a "pulse" or "bubble" that travels through the data by moving a couple of memory blocks at a time (each time step or frame). An object has to wait in a temporary buffer until the bubble arrives and creates a free spot. Accessing an object in this temporary buffer is expensive and causes cache misses (because it's far away from everything else in memory). But once the bubble arrives at its destination and a properly sorted spot becomes available, the object moves there and accessing it becomes efficient again.

But if data changes frequently this can get very messy. A memory bubble might be halfway to its destination, only to find out that the object waiting in the temporary buffer has been destroyed. Or maybe the object waiting in the temporary buffer has moved in physical space, and now it needs to be re-sorted to a different memory location (so the bubble is now going the wrong way). These solutions are starting to seem overly complex and unnecessarily hard to debug and maintain.

Given the problem described above, what defragmenting and sorting algorithm(s) would be suitable?

Note: I'm aware of entity component systems as a solution to cache locality for these kinds of applications but I'm curious about a more traditional object-oriented approach that still achieves good locality. I find it very easy to reason about objects, especially when their locations in memory map to their physical locations in space.

What's Your Reaction?

![Depth of Knowledge and leading PD [NAESP]](http://dangerouslyirrelevant.org/wp-content/uploads/2025/01/NAESP-Logo-Square-2.jpg)