Create Portrait Mode Effect with Segment Anything Model 2 (SAM2)

The post Create Portrait Mode Effect with Segment Anything Model 2 (SAM2) appeared first on MarkTechPost.

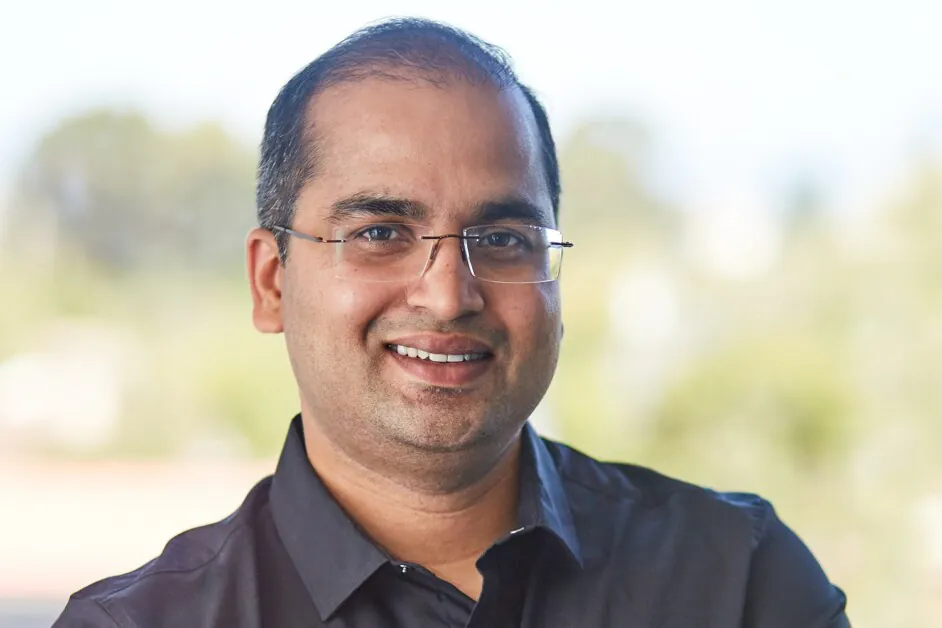

Have you ever admired how smartphone cameras isolate the main subject and add a subtle, depth-dependent blur to the background? This “portrait mode” effect gives photographs a professional look by simulating the shallow depth of field of DSLR cameras. In this tutorial, we’ll recreate the effect programmatically using open-source computer vision models: SAM2 from Meta and MiDaS from Intel ISL.

Tools and Frameworks

To build our pipeline, we’ll use:

- Segment Anything Model 2 (SAM2): To segment the subject of interest and separate the foreground from the background.

- Depth Estimation Model (MiDaS): To compute a depth map that drives the depth-based blur.

- Gaussian Blur: To blur the background with an intensity that increases with distance from the camera.

Step 1: Setting Up the Environment

To get started, install the following dependencies:

```bash
pip install matplotlib samv2 pytest opencv-python timm pillow
```

Step 2: Loading a Target Image

Choose a picture to apply the effect to, load it with the Pillow library, and convert it to a NumPy array.

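```python
from PIL import Image
import numpy as np
import matplotlib.pyplot as plt

image_path = ".jpg"  # path to your image file
# Convert to RGB so the array always has three channels, as both models expect
img = Image.open(image_path).convert("RGB")
img_array = np.array(img)

# Display the image
plt.imshow(img)
plt.axis("off")
plt.show()
```

Step 3: Initialize SAM2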

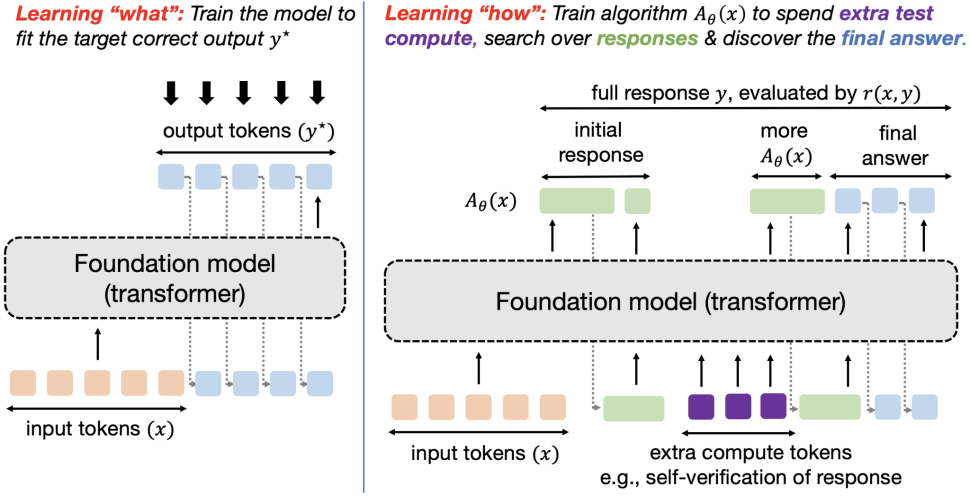

To initialize the model, download a pretrained checkpoint. SAM2 comes in four variants that trade accuracy for inference speed: tiny, small, base_plus, and large. In this tutorial, we use tiny for faster inference.

Download the model checkpoint from: https://dl.fbaipublicfiles.com/segment_anything_2/072824/sam2_hiera_

In Step 4, we set the image in SAM and provide points that lie on the subject we want to isolate. SAM then predicts a binary mask that separates the subject from the background.

In Step 5, we estimate depth with MiDaS by Intel ISL. As with SAM2, you can choose among MiDaS variants that trade accuracy for speed. Note: MiDaS predicts relative inverse depth, so larger values correspond to closer objects. We invert the map in Step 6 so that larger values mean farther away, which is more intuitive for blurring.

In Step 6, we blur the image according to depth using an iterative Gaussian blur. Instead of applying a single large kernel, we apply a smaller kernel multiple times, with more passes for pixels that lie farther from the camera.
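Why does repeating a small kernel work? Convolving a Gaussian of standard deviation σ with itself n times yields a Gaussian of standard deviation σ√n, because the variances of the kernels add under convolution. The NumPy sketch below checks this identity on a sampled 1-D kernel; `gaussian_kernel_1d` and `kernel_variance` are illustrative helpers, not OpenCV functions.

```python
import numpy as np


def gaussian_kernel_1d(sigma, radius):
    """Sampled, normalized 1-D Gaussian kernel with 2 * radius + 1 taps."""
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def kernel_variance(k):
    """Variance of a normalized, odd-length kernel treated as a distribution centered at 0."""
    radius = (len(k) - 1) // 2
    x = np.arange(-radius, radius + 1, dtype=float)
    return float(np.sum(k * x ** 2))


base = gaussian_kernel_1d(sigma=2.0, radius=8)
n_passes = 4

# Applying the kernel n times is equivalent to convolving it with itself n times
combined = base
for _ in range(n_passes - 1):
    # 'full' mode keeps every tap, so the symmetric result stays centered
    combined = np.convolve(combined, base, mode="full")

single_var = kernel_variance(base)
combined_var = kernel_variance(combined)              # = n_passes * single_var
effective_sigma = np.sqrt(combined_var)               # = sqrt(single_var) * sqrt(n_passes)
```

As a rough guide: when `sigma` is 0, OpenCV derives it from the kernel size as 0.3·((ksize - 1)·0.5 - 1) + 0.8, which is 5.6 for a 35-pixel kernel. A pixel that receives 20 passes of that kernel is therefore blurred roughly like a single Gaussian with σ ≈ 5.6·√20 ≈ 25.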

Finally, in Step 7, we use the SAM mask to extract the sharp foreground and composite it over the blurred background.

With just a few tools, we’ve recreated the portrait mode effect programmatically. The technique extends naturally to photo-editing applications, simulated camera effects, and creative projects.


Below is the code for each step. For Step 3, build the tiny SAM2 variant from the downloaded checkpoint and wrap it in an image predictor:

```python
from sam2.build_sam import build_sam2
from sam2.sam2_image_predictor import SAM2ImagePredictor
from sam2.utils.misc import variant_to_config_mapping
from sam2.utils.visualization import show_masks

# Build the tiny SAM2 variant from the downloaded checkpoint
model = build_sam2(
    variant_to_config_mapping["tiny"],
    "sam2_hiera_tiny.pt",
)

image_predictor = SAM2ImagePredictor(model)
```

Step 4: Feed Image into SAM and Select the Subject
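The prompt coordinates in the Step 4 code are absolute (x, y) pixel positions chosen for the author's image, with x first and y second. If your picture has a different resolution, one option is to place the points as fractions of the image size. The helper below, `relative_points_to_pixels`, is a hypothetical convenience function, not part of SAM2; its output has the same (N, 2) layout as `input_point`.

```python
import numpy as np


def relative_points_to_pixels(rel_points, image_shape):
    """Convert (x, y) fractions in [0, 1] to integer (x, y) pixel coordinates.

    Hypothetical helper for SAM2 point prompts; image_shape is the NumPy
    shape (height, width[, channels]) of the image array.
    """
    height, width = image_shape[:2]
    rel = np.asarray(rel_points, dtype=float)
    # Scale x by the width and y by the height, then clip to valid pixel indices
    xs = np.clip(np.round(rel[:, 0] * width), 0, width - 1)
    ys = np.clip(np.round(rel[:, 1] * height), 0, height - 1)
    return np.stack([xs, ys], axis=1).astype(int)


# Three points along the vertical center line of a hypothetical 1000x800 RGB image
# (in practice, pass img_array.shape), all labeled as foreground (1)
input_point = relative_points_to_pixels([[0.5, 0.3], [0.5, 0.5], [0.5, 0.7]], (1000, 800, 3))
input_label = np.ones(len(input_point), dtype=int)
```

The Step 4 code, with the author's absolute coordinates, is: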

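```python
# Compute the image embedding once; different prompts can then be tried cheaply
image_predictor.set_image(img_array)

# (x, y) pixel coordinates of points on the subject; these values suit the
# author's example image, so adjust them for your own picture
input_point = np.array([[2500, 1200], [2500, 1500], [2500, 2000]])
input_label = np.array([1, 1, 1])  # 1 = foreground point, 0 = background point

masks, scores, logits = image_predictor.predict(
    point_coords=input_point,
    point_labels=input_label,
    box=None,
    multimask_output=True,  # return several candidate masks, each with a score
)

output_mask = show_masks(img_array, masks, scores)

# Candidate mask indices, ordered from highest to lowest score
sorted_ind = np.argsort(scores)[::-1]
```

Step 5: Initialize the Depth Estimation Model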
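The Step 5 code hard-codes `DPT_Large`. The MiDaS PyTorch Hub entry point also offers `DPT_Hybrid` and `MiDaS_small`, which trade accuracy for speed; the two DPT models use the `dpt_transform` preprocessing, while `MiDaS_small` uses `small_transform`. A minimal sketch for switching variants, where `MIDAS_TRANSFORMS` and `load_midas` are illustrative names rather than part of MiDaS:

```python
# Preprocessing transform that matches each MiDaS hub variant
MIDAS_TRANSFORMS = {
    "DPT_Large": "dpt_transform",      # MiDaS v3 - Large: highest accuracy, slowest
    "DPT_Hybrid": "dpt_transform",     # MiDaS v3 - Hybrid: medium accuracy and speed
    "MiDaS_small": "small_transform",  # MiDaS v2.1 - Small: lowest accuracy, fastest
}


def load_midas(model_type="DPT_Large"):
    """Load a MiDaS model and its matching transform from PyTorch Hub (downloads weights)."""
    if model_type not in MIDAS_TRANSFORMS:
        raise ValueError(f"Unknown MiDaS variant: {model_type!r}")
    import torch  # imported lazily so the mapping above is usable without torch

    model = torch.hub.load("intel-isl/MiDaS", model_type)
    model.eval()
    transforms = torch.hub.load("intel-isl/MiDaS", "transforms")
    transform = getattr(transforms, MIDAS_TRANSFORMS[model_type])
    return model, transform
```

For example, `model, transform = load_midas("MiDaS_small")` would replace the two `torch.hub.load` calls below. The full Step 5 code, using `DPT_Large`, is: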

```python
import torch

model_type = "DPT_Large"  # MiDaS v3 - Large (highest accuracy)

# Load the MiDaS model from PyTorch Hub
model = torch.hub.load("intel-isl/MiDaS", model_type)
model.eval()

# Load the matching preprocessing transform, which returns a batched tensor
transform = torch.hub.load("intel-isl/MiDaS", "transforms").dpt_transform
input_batch = transform(img_array)

# Perform depth estimation
with torch.no_grad():
    prediction = model(input_batch)

    # Resize the prediction back to the original image resolution
    prediction = torch.nn.functional.interpolate(
        prediction.unsqueeze(1),
        size=img_array.shape[:2],
        mode="bicubic",
        align_corners=False,
    ).squeeze()

prediction = prediction.cpu().numpy()

# Visualize the depth map (MiDaS predicts inverse depth, so brighter = closer)
plt.imshow(prediction, cmap="plasma")
plt.colorbar(label="Relative Depth")
plt.title("Depth Map Visualization")
plt.show()
```

Step 6: Apply Depth-Based Gaussian Blur
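Before blurring, the Step 6 function inverts the MiDaS output (larger values then mean farther away) and quantizes it into integer blur levels, where level 0 marks the closest pixels, which stay sharp. The NumPy-only sketch below isolates that preprocessing on a toy array; `depth_to_blur_levels` is a hypothetical name, and it reproduces the min-max scaling of `cv2.normalize(..., cv2.NORM_MINMAX)` with plain arithmetic, rounding to the nearest level.

```python
import numpy as np


def depth_to_blur_levels(inverse_depth, max_repeats):
    """Map a MiDaS-style inverse-depth map to integer blur levels in [0, max_repeats]."""
    # Invert: larger values now mean farther from the camera
    depth = inverse_depth.max() - inverse_depth
    # Min-max normalize to [0, 1], guarding against a perfectly flat map
    span = depth.max() - depth.min()
    if span == 0:
        return np.zeros(depth.shape, dtype=np.uint8)
    normalized = (depth - depth.min()) / span
    # Scale to [0, max_repeats] and round to the nearest integer level
    return np.round(normalized * max_repeats).astype(np.uint8)


# Toy 2x3 "prediction": the left column is close (large values), the right is far
toy_prediction = np.array([[9.0, 5.0, 1.0],
                           [9.0, 3.0, 1.0]])
levels = depth_to_blur_levels(toy_prediction, max_repeats=4)
```

The complete Step 6 function, which also applies the blur passes, is: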

```python
import cv2

def apply_depth_based_blur_iterative(image, depth_map, base_kernel_size=7, max_repeats=10):
    # cv2.GaussianBlur requires an odd kernel size
    if base_kernel_size % 2 == 0:
        base_kernel_size += 1

    # Invert the MiDaS output so that larger values mean farther away
    depth_map = np.max(depth_map) - depth_map

    # Quantize depth into integer blur levels in [0, max_repeats]
    depth_levels = np.round(
        cv2.normalize(depth_map, None, 0, max_repeats, cv2.NORM_MINMAX)
    ).astype(np.uint8)

    result = image.copy()   # level-0 (closest) pixels stay sharp
    blurred = image.copy()
    for repeat in range(1, max_repeats + 1):
        # Blur the running image once more, so pixels at level `repeat`
        # receive `repeat` passes of the small kernel in total
        blurred = cv2.GaussianBlur(blurred, (base_kernel_size, base_kernel_size), 0)
        mask = depth_levels == repeat
        result[mask] = blurred[mask]

    return result


blurred_image = apply_depth_based_blur_iterative(img_array, prediction, base_kernel_size=35, max_repeats=20)

# Visualize the result
plt.figure(figsize=(20, 10))

plt.subplot(1, 2, 1)
plt.imshow(img)
plt.title("Original Image")
plt.axis("off")

plt.subplot(1, 2, 2)
plt.imshow(blurred_image)
plt.title("Depth-based Blurred Image")
plt.axis("off")

plt.show()
```

Step 7: Combine Foreground and Background
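The compositing step relies on NumPy broadcasting: the (H, W) SAM mask gets a trailing channel axis so that `np.where` can choose, pixel by pixel, between two (H, W, 3) images. A toy-sized sketch of that selection, using made-up 2x2 arrays in place of the real images:

```python
import numpy as np

# Toy 2x2 RGB images: a "sharp" foreground (all 200) and a "blurred" background (all 50)
foreground = np.full((2, 2, 3), 200, dtype=np.uint8)
background = np.full((2, 2, 3), 50, dtype=np.uint8)

# SAM-style binary mask: 1 marks subject pixels, 0 marks background pixels
mask = np.array([[1, 0],
                 [0, 1]], dtype=np.uint8)

# (2, 2) -> (2, 2, 1), which broadcasts against the (2, 2, 3) images
composite = np.where(mask[..., None].astype(bool), foreground, background)
```

The Step 7 code applies the same idea to the full-resolution images: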

```python
def combine_foreground_background(foreground, background, mask):
    # Add a channel axis so an (H, W) mask broadcasts over the color channels
    if mask.ndim == 2:
        mask = np.expand_dims(mask, axis=-1)
    # Sharp pixels where the mask is set, blurred pixels everywhere else
    return np.where(mask, foreground, background)


# Use the highest-scoring SAM mask
mask = masks[sorted_ind[0]].astype(np.uint8)
# cv2.resize takes (width, height); nearest-neighbor keeps the mask binary
mask = cv2.resize(mask, (img_array.shape[1], img_array.shape[0]), interpolation=cv2.INTER_NEAREST)

foreground = img_array
background = blurred_image
combined_image = combine_foreground_background(foreground, background, mask)

plt.figure(figsize=(20, 10))

plt.subplot(1, 2, 1)
plt.imshow(img)
plt.title("Original Image")
plt.axis("off")

plt.subplot(1, 2, 2)
plt.imshow(combined_image)
plt.title("Final Portrait Mode Effect")
plt.axis("off")

plt.show()
```

Conclusion

Future Enhancements:
